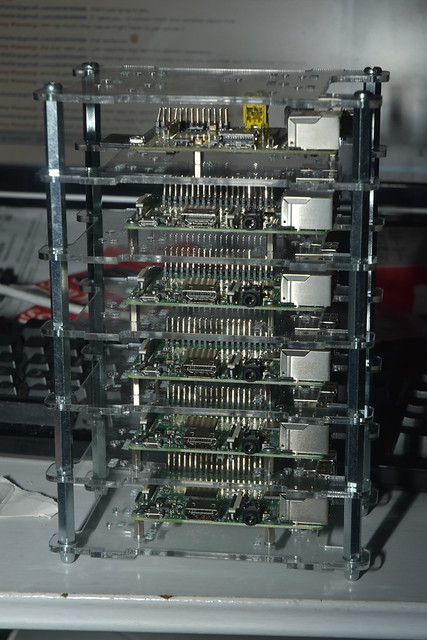

I got myself a bunch of Raspberry Pi 3s a short while ago:

...the idea being that I can use those to run a few experiments on. After each experiment, I need some way to remove whatever I put on them. The most obvious way to do that is to reimage them after every experiment; but the one downside of the multi-pi cases that I've put them in is that removing the micro-SD cards from the Pis without removing the Pis from the case, while possible, is somewhat fiddly. That, plus the fact that I cared more about price than about speed when I got the micro-SD cards, makes "image all the cards of these Raspberry Pis" something I don't want to do every day.

So I needed a different way to reset them to a clean state, and I decided to use ansible to get it done. It required a bit of experimentation, but eventually I came up with this:

    ---
    - name: install package-list fact
      hosts: all
      remote_user: root
      tasks:
        - name: install libjson-perl
          apt:
            pkg: libjson-perl
            state: latest
        - name: create ansible directory
          file:
            path: /etc/ansible
            state: directory
        - name: create facts directory
          file:
            path: /etc/ansible/facts.d
            state: directory
        - name: copy fact into ansible facts directory
          copy:
            dest: /etc/ansible/facts.d/allowclean.fact
            src: allowclean.fact
            mode: 0755
    - name: remove extra stuff
      hosts: pis
      remote_user: root
      tasks:
        - apt: pkg={{ item }} state=absent purge=yes
          with_items: "{{ ansible_local.allowclean.cleanable_packages }}"
    - name: remove package facts
      hosts: pis
      remote_user: root
      tasks:
        - file:
            path: /etc/ansible/facts.d
            state: absent
        - apt:
            pkg: libjson-perl
            state: absent
            autoremove: yes
            purge: yes

This ansible playbook has three plays. The first play installs a custom ansible fact (written in Perl) that emits JSON which defines cleanable_packages as a list of packages to remove. The second uses that fact to actually remove those packages; and the third removes the fact (and the libjson-perl library we used to produce the JSON).

Which means, really, that all the hard work is done inside the allowclean.fact file. That looks like this:

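    #!/usr/bin/perl

    use strict;
    use warnings;
    use JSON;

    # Read the list of installed packages from dpkg.
    open PACKAGES, "dpkg -l|" or die "cannot run dpkg: $!";

    my @packages;

    my %whitelist = (
    ...
    );

    while(<PACKAGES>) {
        # Only consider lines for installed packages ("ii").
        next unless /^ii\s+(\S+)/;
        my $pack = $1;
        next if(exists($whitelist{$pack}));
        # Strip the architecture qualifier, if there is one.
        if($pack =~ /(.*):armhf/) {
            $pack = $1;
        }
        # $pack now holds the name without the architecture qualifier.
        push @packages, $pack;
    }

    my %hash;
    $hash{cleanable_packages} = \@packages;

    print to_json(\%hash);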

Basically, we create a whitelist, and compare the output of dpkg -l against that whitelist. If a certain package is in the whitelist, then we don't add it to our "packages" list; if it isn't, we do.

The whitelist is just a list of package => 1 pairs. We just need the hash keys and don't care about the data, really; I could equally well have made it package => undef, I suppose, but whatever.

Creating the whitelist is not that hard. I did it by setting up one Raspberry Pi to the minimum that I wanted, running

    dpkg -l|awk '/^ii/{print "\t\""$2"\" => 1,"}' > packagelist

and then adding the contents of that packagelist file in place of the ... in the above perl script.

Now whenever we apply the ansible playbook above, all packages (except for those in the whitelist) are removed from the system.
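Since the second play purges packages without asking, it can be reassuring to look at the removal list first. What follows is a minimal shell sketch of the same whitelist comparison, not part of the playbook above; the function name removable_packages and the plain-text whitelist file (one package name per line) are assumptions made for this illustration.

```shell
#!/bin/sh
# Print every installed package name that is absent from the whitelist.
# $1: file with installed package names, one per line
# $2: whitelist file, one package name per line (hypothetical format)
removable_packages() {
    # -v: invert the match, -x: match whole lines only,
    # -F: fixed strings (no regex), -f: read the patterns from a file
    grep -vxFf "$2" "$1"
}

# Demo on sample data, so nothing on the real system is touched.
tmpdir=$(mktemp -d)
printf '%s\n' bash coreutils vim-tiny nginx > "$tmpdir/installed"
printf '%s\n' bash coreutils vim-tiny > "$tmpdir/whitelist"
removable_packages "$tmpdir/installed" "$tmpdir/whitelist"   # prints: nginx
rm -r "$tmpdir"
```

On a real Pi, the installed list could come from the same dpkg -l output that the fact script parses, for example dpkg -l | awk '/^ii/{print $2}' > installed.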

Doing the same for things like users and files is left as an exercise to the reader.
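As a rough starting point for the users part of that exercise, the same whitelist idea could be sketched as below. This is not taken from the playbook above: the function name removable_users and the whitelist file format are made up for the example, and the UID range 1000 to 59999 is assumed to be the range of regular accounts (the usual Debian default; check /etc/adduser.conf on your own system).

```shell
#!/bin/sh
# List regular user accounts that are not on a whitelist.
# $1: a passwd-format file (e.g. /etc/passwd)
# $2: whitelist file, one user name per line (hypothetical format)
removable_users() {
    # Field 3 of a passwd line is the numeric UID; 1000-59999 is assumed
    # to be the range of regular (non-system) accounts.
    awk -F: '$3 >= 1000 && $3 <= 59999 { print $1 }' "$1" |
        grep -vxFf "$2"
}

# Demo on sample data, so nothing on the real system is touched.
tmpdir=$(mktemp -d)
cat > "$tmpdir/passwd" <<'EOF'
root:x:0:0:root:/root:/bin/bash
pi:x:1000:1000:,,,:/home/pi:/bin/bash
experiment:x:1001:1001:,,,:/home/experiment:/bin/bash
nobody:x:65534:65534:nobody:/nonexistent:/usr/sbin/nologin
EOF
echo pi > "$tmpdir/whitelist"
removable_users "$tmpdir/passwd" "$tmpdir/whitelist"   # prints: experiment
rm -r "$tmpdir"
```

The resulting names could then be fed to a play that removes users, in the same way the package list is fed to the apt task.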

Side note: ... is a valid perl construct. You can write it, and the compiler won't complain. You can't run it, though.

... but that doesn't mean the work is over.

One big job that needs to happen after the conference is to review and release the video recordings that were made. With several hundred videos to be checked and only a handful of people with the ability to do so, review was a massive job that for the past three editions took several months; e.g., in 2016 the last video work was done in July, when the preparation of the 2017 edition had already started.

Obviously this is suboptimal, and therefore another solution was required. After working on it for quite a while (in my spare time), I came up with SReview, a video review and transcoding system written in Perl.

An obvious question that could be asked is why I wrote yet another system, though, and did not use something that already existed. The short answer to that is "because what's there did not exactly do what I wanted it to". The somewhat longer answer also involves the fact that I felt like writing something from scratch.

The full story, however, is this: there isn't very much out there, and what does exist is flawed in some ways. I am aware of three other review systems that are or were used by other conferences:

- A bunch of shell scripts that were written by the DebConf video team and hooked into the penta database. Nobody but DebConf ever used it. It allowed review via an NFS share and a webinterface, and required people to watch .dv files directly from the filesystem in a media player. For this and other reasons, it could only ever be used from the conference itself. If nothing else, that final limitation made it impossible for FOSDEM to use it, but even if that wasn't the case it was still too basic to ever be useful for a conference the size of FOSDEM.

- A review system used by the CCC "voc" team. I've never actually seen it in use, but I've heard people describe it. It involves a complicated setup of Samba servers, short MPEG transport stream segments, a FUSE filesystem, and kdenlive, which took someone several days to set up as an experiment back at DebConf15. Critically, important parts of it are also not licensed as free software, which to me rules it out for a tool in support of FOSDEM. Even if that wasn't the case, however, I'm still not sure it would be ideal; this system requires intimate knowledge of how it works from its user, which makes it harder for us to crowdsource the review to the speaker, as I had planned to.

- Veyepar. This one gets many things right, and we used it for video review at DebConf from DebConf14 onwards, as well as FOSDEM 2014 (but not 2015 or 2016). Unfortunately, it also gets many things wrong. Most of these can be traced back to the fact that Carl, as he freely admits, is not a programmer; he's more of a sysadmin type who also manages to cobble together a few scripts now and then. Some of the things it gets wrong are minor issues that would theoretically be fixable with a minimal amount of effort; others would be more involved. It is also severely underdocumented, and so as a result it is rather tedious for someone not very familiar with the system to be able to use it. On a more personal note, veyepar is also written in the wrong language, so while I might have spent some time improving it, I ended up starting from scratch.

Something all these systems have in common is that they try to avoid postprocessing as much as possible. This only makes sense; if you have to deal with loads and loads of video recordings, having to do too much postprocessing only ensures that it won't get done...

Despite the issues that I have with it, I still think that veyepar is a great system, and am not ashamed to say that SReview borrows many ideas and concepts from it. However, it does things differently in some areas, too:

- A major focus has been on making the review form be as easy to use as possible. While there is still room for improvement (and help would certainly be welcome in that area from someone with more experience in UI design than me), I think the SReview review form is much easier to use than the veyepar one (which has so many options that it's pretty hard to understand sometimes).

- SReview assumes that as soon as there are recordings in a given room sufficient to fill all the time that a particular event in that room was scheduled for, the whole event is available. It will then generate a first rough cut, and send a notification to the speaker in question, as well as the people who organized the devroom. The reviewer will then almost certainly be required to request a second (and possibly third or fourth) cut, but I think the advantage of that is that it makes the review workflow be more intuitive and easier to understand.

- Where veyepar requires one or more instances of per-state scripts to be running (each of which polls the database and starts a transcode or cut or whatever script as needed), SReview uses a single "dispatch" script, which needs to be run once for the whole system (if using an external scheduler) or once per core that may be used (if not using an external scheduler), and which does all the database polling required. The use of an external scheduler seemed more appropriate, given that things like gridengine exist. Gridengine is a job scheduler which allows one to submit a job to be run on any node in a cluster, along with the resources that this particular job requires; it will then either find an appropriate node to run the job on, or put the job in a "pending" state until the required resources can be found. This allows me to more easily add extra encoding capacity when required, and also to do things like allocate fewer resources to a particular part of the whole system, even while jobs are already running, without necessarily needing to abort jobs that might be using those resources.
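The "sufficient recordings" rule from the second point above boils down to an interval coverage check. Below is a minimal shell sketch of that idea only. It is not SReview's code (SReview is written in Perl and works from its database); the function name event_covered, the use of epoch-second timestamps, and the "start end" input format are all assumptions made for illustration.

```shell
#!/bin/sh
# Succeed (exit 0) if the recordings read from stdin, one "start end" pair
# per line, together cover the whole scheduled slot from $1 to $2.
event_covered() {
    # Sort by start time, then sweep: "reach" is how far the slot is
    # covered without a gap, counting from the scheduled start.
    sort -n | awk -v s="$1" -v e="$2" '
        BEGIN { reach = s + 0 }
        # A recording extends the coverage only if it begins at or
        # before the point that is already covered.
        ($1 + 0) <= reach && ($2 + 0) > reach { reach = $2 + 0 }
        END { exit (reach >= e + 0 ? 0 : 1) }
    '
}

# Two back-to-back recordings that span the slot 1200-2700:
printf '%s\n' '1000 1600' '1600 2800' |
    event_covered 1200 2700 && echo "ready for a first cut"

# The same slot with a gap between 1600 and 1900:
printf '%s\n' '1000 1600' '1900 2800' |
    event_covered 1200 2700 || echo "still waiting for recordings"
```

Because the input is sorted by start time, a recording that starts after the covered point means there is a gap that no later recording can fill, so a single pass is enough.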

The system seems to be working fine, although there's certainly still room for improvement. I'm thinking of using it for DebConf17 too, and will therefore probably work on improving it during DebCamp.

Additionally, the experience of using it for FOSDEM 2017 has given me ideas of where to improve it further, so it can be used more easily by different parties, too. Some of these have been filed as issues against a "1.0" milestone on GitHub, but others are only newly formed in my gray matter and will need some thinking through before they can be properly implemented. Certainly, it looks like this will be something that'll give me quite some fun developing further.

In the meantime, if you're interested in the state of a particular video of FOSDEM 2017, have a look at the video overview page, which lists all talks along with their review/transcode status. Also, if you were a speaker or devroom organizer at FOSDEM 2017, please check your mailbox and review your talk! With your help, we should hopefully be able to release all our videos by the end of the week.

Update (2017-02-06 17:18): clarified my position on the qualities of some of the other systems after feedback from people who were a bit disappointed by my description of them... and which was fair enough. Apologies.

Update (2017-02-08 16:26): Fixes to the c3voc stuff after feedback from them.